The Three AI Tensions Breaking Product Management

Or: Why Your AI Agents Keep Failing and What to Do About It

You are reading You Are The Product, the highly irregular newsletter for aspiring product leaders. I’m Mirza, an AI Product Leader building autonomous AI Agents at Zendesk, and you should question everything I claim or at least ask AI to confirm it.

Let me ask you something. Have you built an AI agent in the last six months?

If yes, did it actually work the way you wanted?

And if it did, are you still using it today?

When I spoke to ~100 product managers at the ProductLab Conf in Berlin recently, only about 10% could answer yes to all three questions. We're all experimenting, failing, learning — and mostly failing again.

The promise was that agents would come in, take over, and run the show. In reality, agents mess up. Handovers fail. Latency kills the user experience. We're facing the same issues in both our personal experiments and our enterprise implementations.

And it's fundamentally changing what it means to be a product manager.

What We Talk About When We Talk About Agents

Let's start with clarity, because the industry loves throwing around terms like "autonomous agents," "LLM workflows," and "agentic systems."

An agent is a goal-oriented system that can reason and act with autonomy. It can plan, act, and loop. It has dynamic decision-making ability — using various tools and applying reasoning to solve problems. Think of booking a trip to Thailand autonomously: the agent needs to reason through multiple steps, make decisions, and use various booking systems and platforms.

Most importantly, an AI agent can adapt in real-time to changing context and user needs. A user can switch languages mid-conversation or pivot to an entirely different topic, and just like a human would, the agent adapts.

An agentic system is the workflow where these AI agents interact with each other, plan, make decisions, use tools, and adapt dynamically.

The Three Fundamental Tensions

I've been leading the AI agents product org at Zendesk, which means my team and I live and breathe these systems daily, so much so that I sometimes wonder whether we're stuck in an AI bubble. Through this work, I've identified three fundamental tensions that are breaking everything we know about product management.

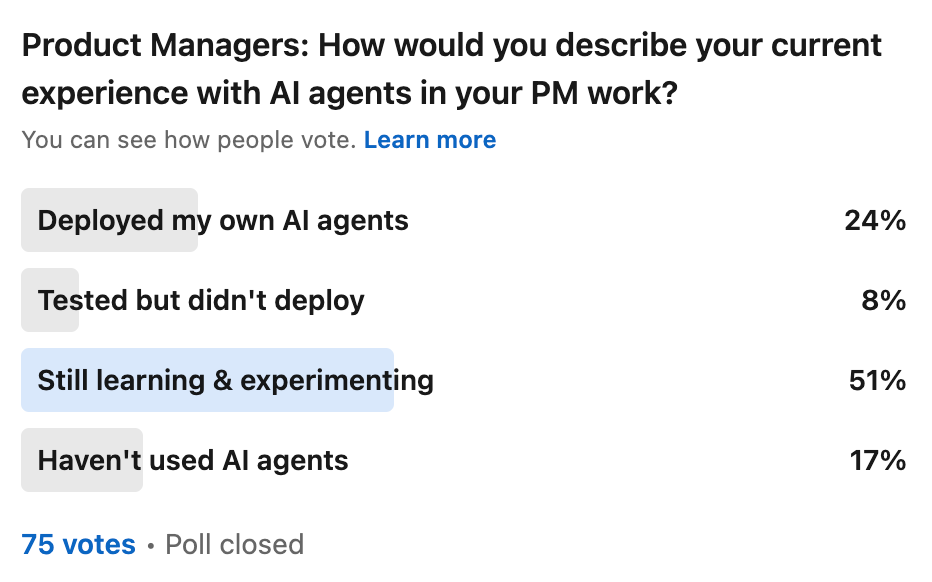

1. The Control Paradox

Users say they want control. They want to train the bot, shape how the system behaves, be part of the process. But they don't want any complexity. They don't actually want to do the work.

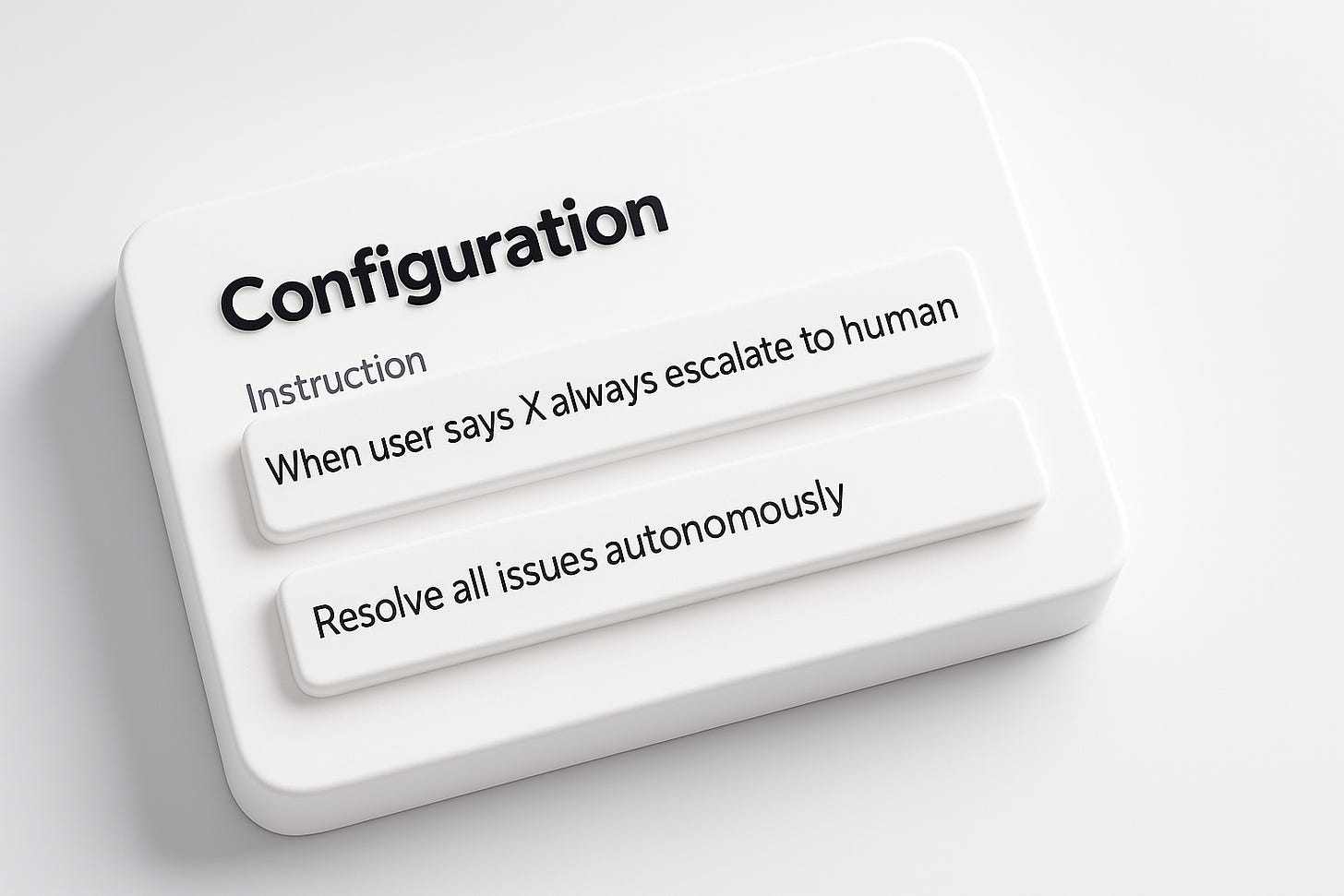

At Zendesk, we give users the ability to create what we call "instructions" — strings of text they can inject into the system's behavior. Here's what they’ll write:

Instruction 1: "When a customer says X, always escalate to a human agent."

Instruction 2 (immediately below): "But resolve everything autonomously."

This contradiction might seem banal, but it reveals something profound: Most users want the illusion of control, not actual system effectiveness.

Individual feedback from supervisors who don't understand the larger system creates local conflicts and contradictions. The solution isn't more control panels or opportunities to mimic control. It's about designing touchpoints that preserve user agency without breaking the underlying system.

What does this look like in practice?

Binary signals (👍 / 👎): We need users to validate what the AI creates, because human judgment is still the best check we have

Evaluation layers: Not every piece of feedback should modify system behavior — we need to understand how feedback fits into the larger picture

Three-layered architecture: Aggregate analytics for the big picture, drill-downs for specifics, and opportunities for qualitative feedback with conflict detection
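To make "conflict detection" a bit more concrete, here is a minimal sketch in Python. It is not how Zendesk does it. It assumes each free-text instruction has already been tagged with a `scope` and an `action` (both hypothetical fields, and in a real system that tagging step is the hard part), and it simply flags pairs whose scopes overlap while their actions disagree.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Instruction:
    text: str
    scope: str   # hypothetical tag: a trigger such as "says_x", or "*" for everything
    action: str  # hypothetical tag: "escalate" or "resolve"


def scopes_overlap(a: str, b: str) -> bool:
    # "*" applies to every conversation, so it overlaps with any other scope.
    return a == "*" or b == "*" or a == b


def find_conflicts(instructions):
    # Compare every pair once and keep those that apply to the same
    # situation but ask for different outcomes.
    return [
        (a, b)
        for a, b in combinations(instructions, 2)
        if scopes_overlap(a.scope, b.scope) and a.action != b.action
    ]


instructions = [
    Instruction("When a customer says X, always escalate to a human agent.", "says_x", "escalate"),
    Instruction("But resolve everything autonomously.", "*", "resolve"),
]

conflicts = find_conflicts(instructions)
for a, b in conflicts:
    print(f"Conflict: {a.text!r} vs {b.text!r}")
```

The point of the sketch is the touchpoint, not the algorithm: the user still writes instructions in plain language, and the system surfaces the contradiction instead of silently picking a winner.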

2. The Orchestration Trap

Here's what most of us in the space are learning about AI agents: specialized agents perform better. But every new specialization adds handoffs, and complexity compounds with each one.

More handoffs between agents means more latency. And if you've ever waited 10, 15, or 20 seconds for a chatbot response, you know users are incredibly sensitive to this. We expect AI to respond with human-like speed. Humans have notoriously low latency, and we expect the same of our machine systems.

More agents also means more failure points. When one agent can't update or fails, the ripple effects are unpredictable. The more complex the system, the harder it becomes to track what happened and why.
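A back-of-the-envelope sketch of why both problems compound. The assumptions are mine and deliberately crude: agents run strictly one after another, each call takes the same time, and failures are independent. The 1.5 seconds and 98% figures are illustrative, not measurements from any real system.

```python
import math


def pipeline_latency(latencies_s):
    # Sequential handoffs: the user waits for the sum of every agent's latency.
    return sum(latencies_s)


def pipeline_success_rate(success_rates):
    # Independent failures: the whole chain succeeds only if every agent does.
    return math.prod(success_rates)


for n_agents in (2, 6, 12):
    latency = pipeline_latency([1.5] * n_agents)        # assumed 1.5 s per agent
    success = pipeline_success_rate([0.98] * n_agents)  # assumed 98% per agent
    print(f"{n_agents:>2} agents: {latency:4.1f} s end to end, {success:.1%} chance of a clean run")
```

Under these assumptions, a dozen agents that each look fast and reliable on their own add up to an 18-second wait and a chain that fails roughly one time in five. Real systems parallelize and retry, so treat this as intuition rather than a forecast.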

Most teams start with two or three autonomous agents — you typically need at least some kind of intent classifier agent to understand your user and perhaps a RAG agent for knowledge retrieval and response generation. We're now at nearly a dozen, and they're becoming so specialized that their names barely translate to human language anymore — they're doing tiny, specific things that only make sense in the context of the larger orchestration.

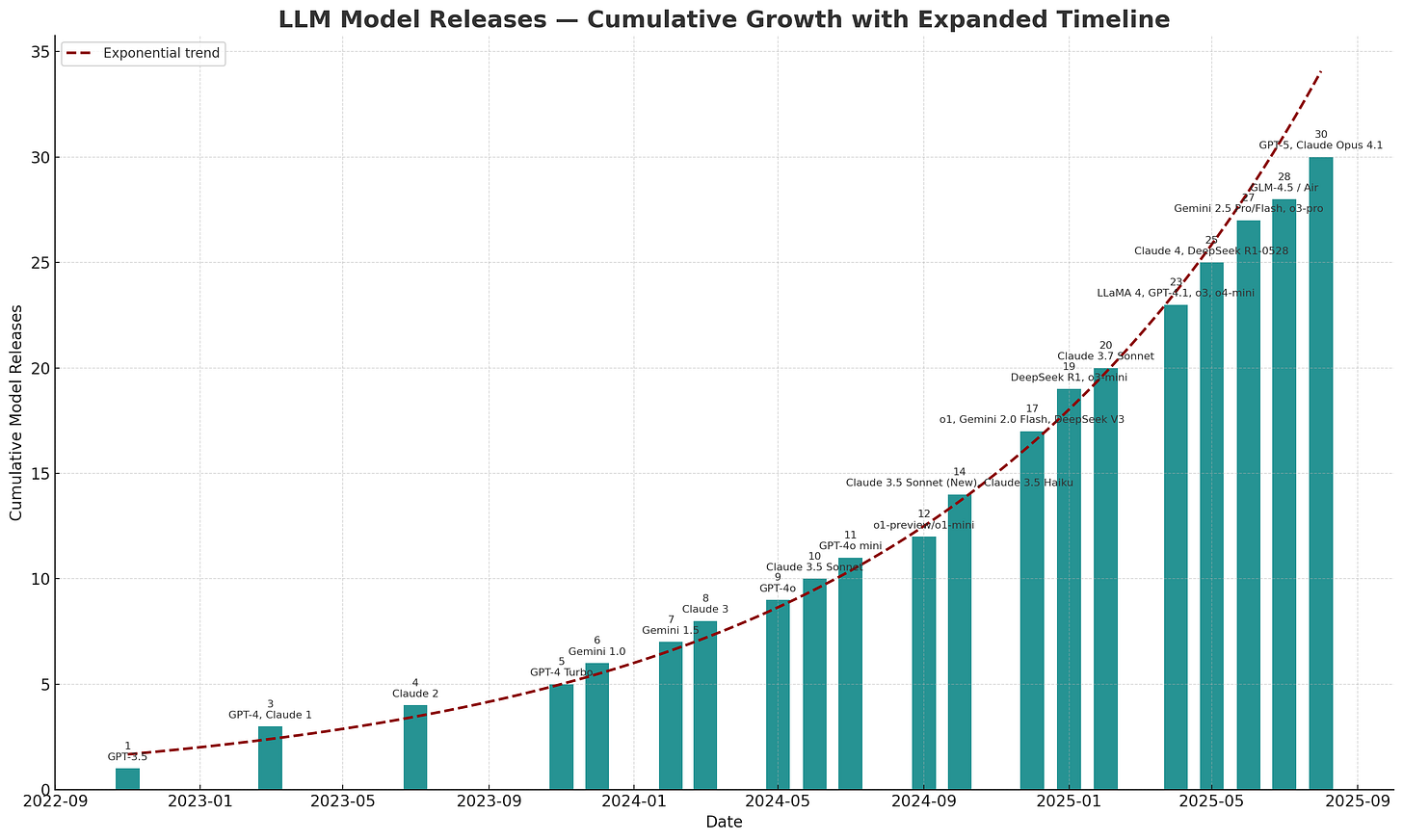

Even worse, we're essentially rebuilding everything every three months. The foundation models keep evolving. Your prompts break when models update. Performance shifts. You're building stable systems on fundamentally unstable foundations.

But here's where it gets interesting: We're not just creating product interfaces for humans anymore. We're creating digital workspaces for AI agents to collaborate with one another.

Think of it like the Spotify model with product tribes, but for agents. You have domains, teams within domains, orchestrators for each domain, and a meta-orchestrator on top. It sounds clean in theory. In practice? Agents don't always belong in their assigned domain. Sometimes they need to jump between contexts. The pain is real, and we're all feeling it.
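Here is a toy version of that hierarchy in Python, assuming agents are plain functions and routing is a dictionary lookup. The domain names (`billing`, `support`) and task names are made up for illustration. Real orchestrators decide routing with a model rather than being told the domain up front.

```python
from typing import Callable, Dict

Agent = Callable[[str], str]


class DomainOrchestrator:
    """Owns the agents of one domain and dispatches tasks to them."""

    def __init__(self, name: str, agents: Dict[str, Agent]):
        self.name = name
        self.agents = agents

    def handle(self, task: str, request: str) -> str:
        if task not in self.agents:
            raise KeyError(f"{self.name} has no agent for task {task!r}")
        return self.agents[task](request)


class MetaOrchestrator:
    """Sits on top and forwards each request to the right domain."""

    def __init__(self, domains: Dict[str, DomainOrchestrator]):
        self.domains = domains

    def handle(self, domain: str, task: str, request: str) -> str:
        return self.domains[domain].handle(task, request)


# Hypothetical domains and agents, stubbed with lambdas.
billing = DomainOrchestrator("billing", {"refund": lambda r: f"refund issued for: {r}"})
support = DomainOrchestrator("support", {"answer": lambda r: f"answer drafted for: {r}"})
meta = MetaOrchestrator({"billing": billing, "support": support})

result = meta.handle("billing", "refund", "order 123")
print(result)

# The pain point: a support conversation that suddenly needs the refund agent.
try:
    meta.handle("support", "refund", "order 123")
except KeyError as err:
    print(f"Routing failed: {err}")
```

The clean tree works until a request crosses a domain boundary. At that point you either duplicate the agent, let it live in two domains, or push the decision back up to the meta-orchestrator, and each option costs you something.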

3. The Evolution Crisis

Teams are starting to split along new lines: those working with AI and those who aren't.

AI research cycles don't correspond to traditional product planning. Something might take six days or six months — that's the nature of probabilistic systems. You can't write an upfront spec (though you never should have been doing that anyway).

Should teams building agentic products stop building user-facing features? Should the autonomous agents be building features instead? These aren't rhetorical questions — they're decisions the tech industry is making right now.

What's changing:

PRDs → Prototypes and context architecture

Feature specs → Agent instructions and prompt engineering

User stories → Agent capabilities and interaction patterns

Sprint planning → Research cycles and hypothesis testing

Launch planning → Continuous orchestration

And a new skill is emerging: evals, the structured tests that tell you whether an agent still behaves the way you intended.

Planning has always been hypothesis testing, but AI makes it impossible to pretend otherwise.
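If you have never written an eval, it can be as small as the sketch below. Everything in it is an assumption for illustration: `stub_agent` stands in for a real model call, and the two cases and their checks are invented. The shape is what matters: a fixed set of prompts, a check per prompt, and a pass rate you can rerun every time a model or prompt changes.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class EvalCase:
    name: str
    prompt: str
    check: Callable[[str], bool]  # returns True if the output is acceptable


def stub_agent(prompt: str) -> str:
    # Placeholder for a real agent or model call (hypothetical behavior).
    if "refund" in prompt.lower():
        return "ESCALATE: handing over to a human agent."
    return "Here is the answer from the knowledge base."


def run_evals(agent: Callable[[str], str], cases: List[EvalCase]) -> Tuple[Dict[str, bool], float]:
    # Run every case through the agent and record pass/fail per case.
    results = {case.name: case.check(agent(case.prompt)) for case in cases}
    pass_rate = sum(results.values()) / len(results)
    return results, pass_rate


cases = [
    EvalCase("escalates_refunds", "I want a refund", lambda out: out.startswith("ESCALATE")),
    EvalCase("answers_faq", "How do I reset my password?", lambda out: not out.startswith("ESCALATE")),
]

results, pass_rate = run_evals(stub_agent, cases)
print(results, f"pass rate: {pass_rate:.0%}")
```

String checks like these only go so far with probabilistic outputs, and many teams end up layering human review or model-graded checks on top. But even a crude suite turns "the new model feels worse" into a number you can track.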

The New PM Toolkit

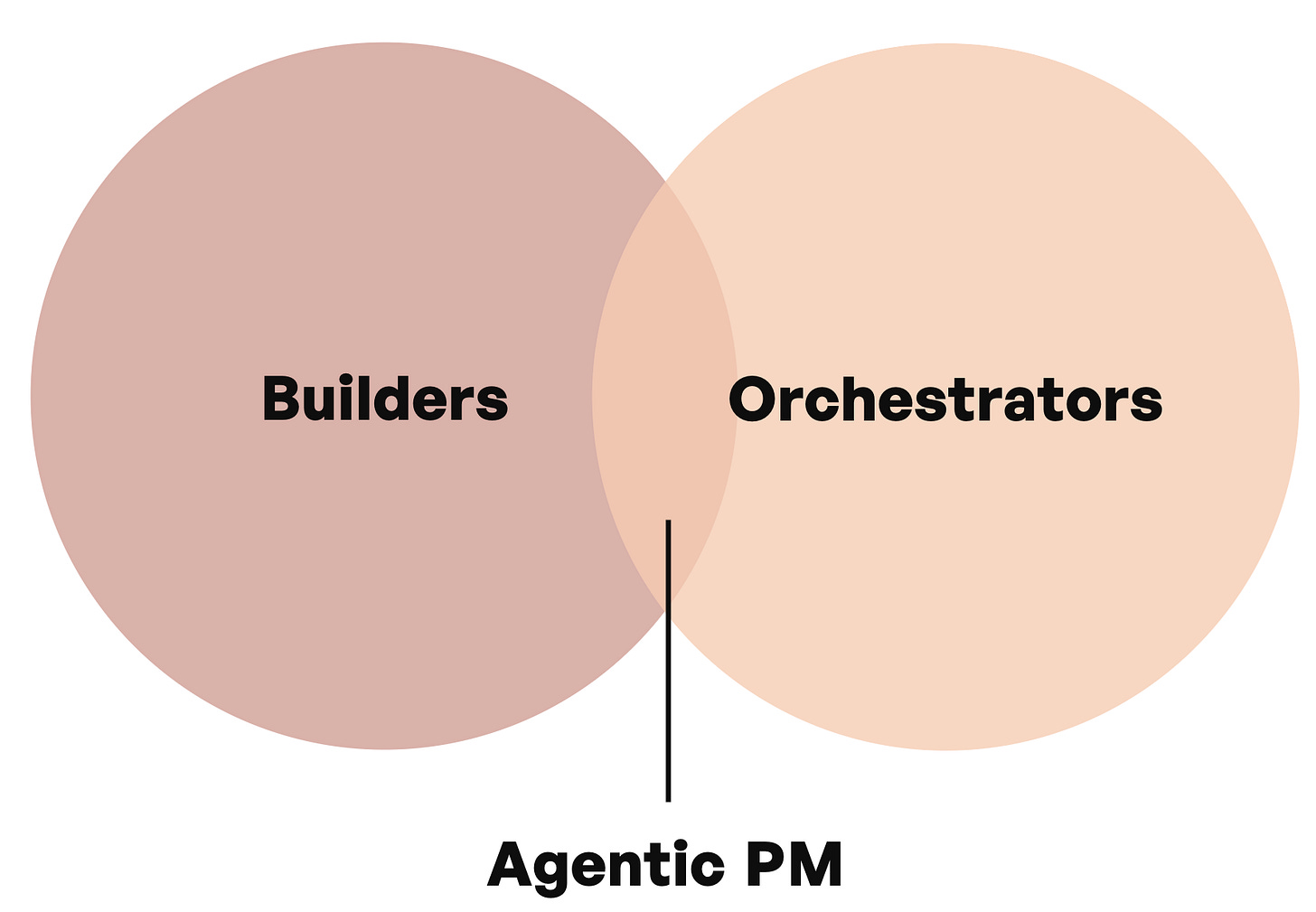

The PMs who will thrive in this world are builders and orchestrators. No, not everyone needs to become technical. We still need strategy, market understanding, and all the classic PM responsibilities. These don't go away — they become more critical because AI systems can't replace human judgment.

But you need to experiment personally and find opportunities to architect at scale. The new AI PM is part builder, part orchestrator — someone who creates agents for themselves, documents what breaks and why, and shares learnings publicly.

Here's your roadmap:

This Week:

Map one workflow (personal or professional) as agent interactions

Identify one human touchpoint where you could simplify

This Month:

Prototype instead of writing a PRD

Run a research cycle instead of a sprint

Share your learnings publicly

Ongoing:

Learn to write evals (this will be the #1 skill tested in interviews next year)

Design agent interaction patterns by chaining agents and watching them break

Create transparency and explainability in your products

Document everything

The Bottom Line

None of us have this figured out. But community is our competitive advantage. Forums, conferences, newsletters like this — these are where we exchange experiences and learn together.

The question isn't whether you'll become an orchestrator. It's whether you'll do it intentionally and learn along the way.

We're evolving from feature builders to orchestrators of human-machine collaboration. We're deploying agents to solve human problems and drive business outcomes. And we're doing it all on shifting foundations that change every three months.

It's messy. It's uncertain. And it's exactly where product management needs to be.

What's your experience building AI agents? What's worked, what's failed spectacularly? Hit reply and let me know — I read every email.

And if you're looking to break into AI product leadership, remember: experiment personally, share publicly, and focus on the orchestration patterns that will define the next era of product management.